A dream of AI-DLC

The Genesis Engine: A Manifesto for the AI-Driven Development Lifecycle

Introduction: The Old World Is Dead

Saturday evening, September 20, 2025.

I’m thinking about how we build.

For two decades we refined rituals—sprints, stand-ups, retrospectives—built to manage the slow, methodical act of human hands typing code. We built a cathedral with masonry tools.

With AI, the situation has changed.

We now wield a Genesis Engine, yet we still run it with the same two-week committee ritual. That mismatch is untenable.

This isn’t about bolting AI onto a JIRA board—the timid “AI-powered” world of incrementalism that misunderstands the scale of change. It’s about melting the board down and forging a new way of working from first principles.

This is a manifesto for an AI-driven world where processes, tools, and architectures revolve around machine-speed creation.

This is the AI-Driven Development Lifecycle (AI-DLC).

Part I: From Why to What - The Strategic Framework

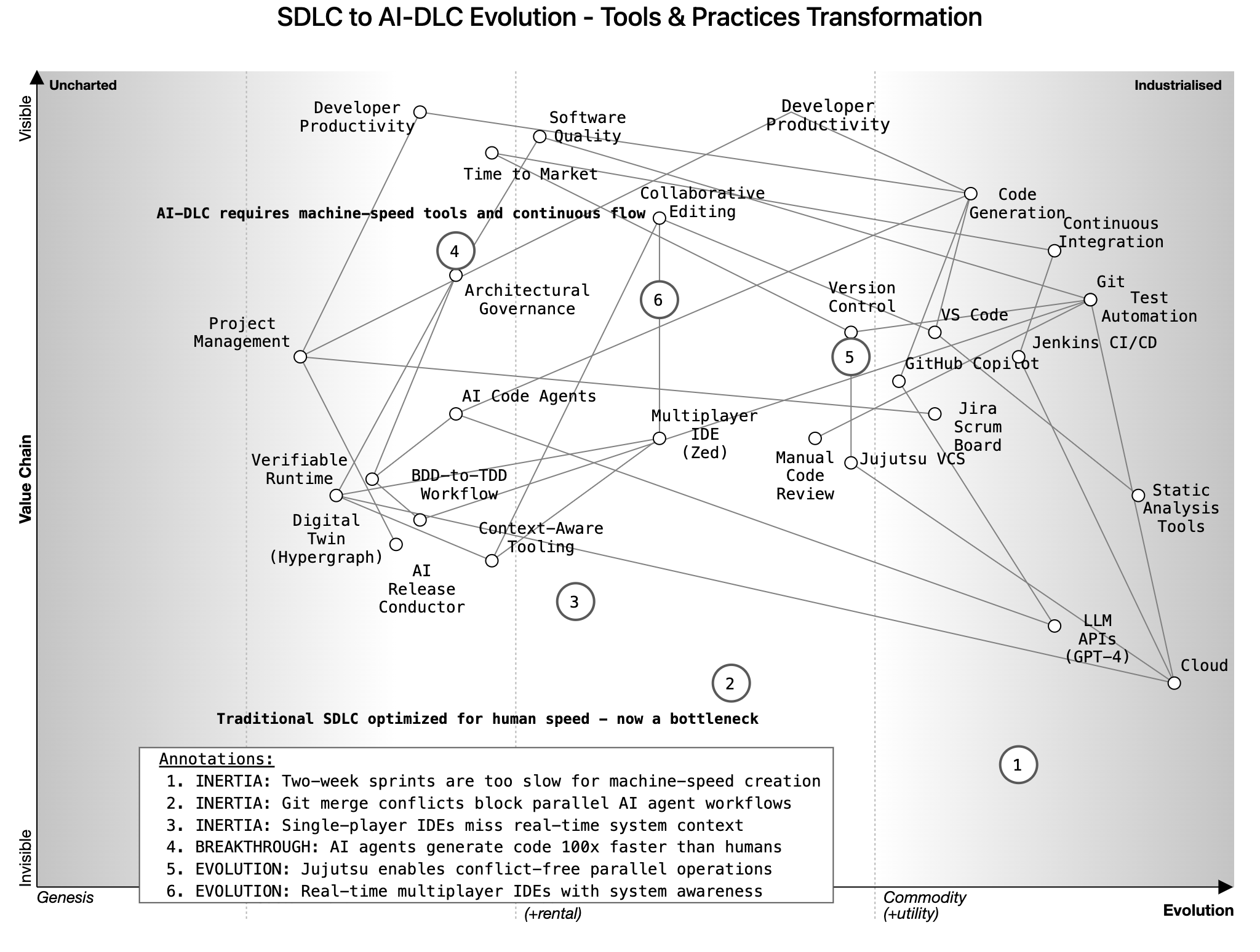

Know Your Battlefield: Wardley Mapping First

The act of creation in our industry has been a form of alchemy, a dark art guided by gut feelings, technical fads, and the gravitational pull of the Highest Paid Person’s Opinion.

We have been driving at a hundred miles an hour, at night, with no headlights, celebrating our speed while utterly blind to the cliff edge just ahead.

Before we unleash a Genesis Engine that builds at the speed of thought, we must understand the landscape.

This is the foundational act of engineering. Not an option.

How?

Wardley Mapping.

It’s a discipline that forces the strategic conversation we’ve avoided for decades.

To begin, we need to anchor our work to a person, not to a technology.

- Who are we serving? We anchor our entire world to the user. Their needs are the sun around which our system must orbit. We name them. We understand them.

- What do they actually need? We define the value they seek, the job they are trying to do. Not the feature we want to build, but the fundamental need they are trying to satisfy.

- What must we build to satisfy that need? This is where the map comes to life. We chart the entire value chain, a cascade of dependencies from the user’s need down to the commodity electricity that powers the servers. Each component is a node on the map, its position not arbitrary, but placed on an evolutionary axis — from chaotic and custom-built to orderly and commoditized.

The resulting map is our situational awareness — our shared understanding of the battlefield: where to attack, what to build, what to buy, and what to leave to others. The strategic “why” that will guide the tactical “how.”
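To make the map something agents can consume, it helps to picture it as data. The sketch below is one minimal way to do that, not a prescribed format: the `Component` class, the `value_chain` walk, the `build_or_buy` heuristic, and the sample components are all illustrative assumptions. The four stage names follow the usual evolution axis of a Wardley Map.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Evolution(IntEnum):
    """The four stages on a Wardley Map's evolution axis."""
    GENESIS = 1
    CUSTOM_BUILT = 2
    PRODUCT = 3
    COMMODITY = 4


@dataclass
class Component:
    name: str
    evolution: Evolution
    needs: list[str] = field(default_factory=list)  # components this one depends on


def value_chain(components: dict[str, Component], anchor: str) -> list[str]:
    """Walk dependencies depth-first from the user need down to the commodities."""
    seen: list[str] = []

    def visit(name: str) -> None:
        if name in seen:
            return
        seen.append(name)
        for dep in components[name].needs:
            visit(dep)

    visit(anchor)
    return seen


def build_or_buy(component: Component) -> str:
    """Hypothetical heuristic: build what is novel, buy what is commoditized."""
    return "build" if component.evolution <= Evolution.CUSTOM_BUILT else "buy"


# Hypothetical example map, anchored on a user-facing checkout need.
wardley_map = {
    "checkout": Component("checkout", Evolution.CUSTOM_BUILT, ["payments", "compute"]),
    "payments": Component("payments", Evolution.PRODUCT, ["compute"]),
    "compute": Component("compute", Evolution.COMMODITY, ["electricity"]),
    "electricity": Component("electricity", Evolution.COMMODITY),
}
```

Calling `value_chain(wardley_map, "checkout")` walks the chain from the user need down to electricity, and `build_or_buy` gives a first, crude answer to "what to build, what to buy" for each node.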

Thinking in Public: The AI-Augmented RFD Process

With the map in hand, we have the strategy. It’s time to design the system.

The old way was a series of endless meetings that drain the life out of a team, culminating in a dense design document that is obsolete the moment it’s saved as a PDF. We replace it with a new philosophy: thinking in public, asynchronously, at a speed that matches our new reality. We adapt the rigorous RFD (Request for Discussion) process popularized by Oxide Computer and supercharge it with AI.

Here’s the flow:

- The Prompt: An architect takes the clarity from the Wardley Map and crafts the prompt. This is not a casual question; it is a carefully constructed brief, a distillation of intent, constraints, and desired outcomes. This is the human providing the soul of the machine.

- The AI Apprentice: This brief is fed into an AI workflow, an orchestrated chain of AI agents designed for architectural synthesis. The first agent generates the initial draft of the RFD, including API contracts, justified technology choices, and security considerations. This isn’t a sketch but an 80% solution, generated and committed to version control in seconds.

- The Asynchronous Gauntlet: The RFD is now open for discussion. No meetings. The team descends upon the document asynchronously: they challenge assumptions, propose alternatives, and demand clarifications directly in the document’s review tools. This is the crucible where the design is hardened.

- The AI Synthesizer: Here an AI agent becomes an active participant in the review. It synthesizes the disparate threads of conversation, identifies points of emerging consensus, and flags irreconcilable conflicts for human attention. It can take a proposed alternative, run a simulation against the Digital Twin (our live hypergraph model of the system), and report back with performance and cost implications. It then generates a revised version of the RFD, complete with a changelog explaining how it incorporated the human feedback. This loop of human debate, AI synthesis, and revised proposal can cycle multiple times in a single afternoon.

- The Human Gate: Once the discussion stabilizes and a clear, robust design has emerged, the process halts. The lead architect reviews the final state of the RFD and makes the call. They move its status to “Published.” This is the moment of accountability.

Upon publication, the RFD is transformed. It ceases to be a document and becomes a living architecture — a machine-readable constitution, its contracts and constraints fed directly into our Verifiable Runtime to govern the creation that is to come.
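The flow above can be read as a small state machine with one human-only transition. The following is a minimal sketch under stated assumptions: the `Rfd` class, the three state names (`draft`, `discussion`, `published`), and the `lead_architect` role string are simplifications invented for illustration and do not reproduce Oxide’s actual RFD states.

```python
from dataclasses import dataclass, field


@dataclass
class Rfd:
    number: int
    title: str
    state: str = "draft"          # draft -> discussion -> published
    revisions: list[str] = field(default_factory=list)
    open_conflicts: int = 0

    def open_discussion(self, first_draft: str) -> None:
        """The AI-generated first draft opens the asynchronous review."""
        self.revisions.append(first_draft)
        self.state = "discussion"

    def revise(self, new_text: str, unresolved: int) -> None:
        """One loop of human debate followed by AI synthesis."""
        if self.state != "discussion":
            raise ValueError("can only revise an RFD under discussion")
        self.revisions.append(new_text)
        self.open_conflicts = unresolved

    def publish(self, approver_role: str) -> None:
        """The human gate: only the lead architect may publish."""
        if approver_role != "lead_architect":
            raise PermissionError("publication requires the lead architect")
        if self.state != "discussion" or self.open_conflicts > 0:
            raise ValueError("discussion has not stabilized")
        self.state = "published"
```

The design choice worth noticing is that `publish` checks who is asking before it checks anything else: an agent can revise as often as it likes, but accountability for the final call stays with a person.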

Part II: The Engine of Creation — A New Technical Reality

The Living Blueprint: The Hypergraph of Functions

How do we govern, understand, and safely evolve a system that is being built by a legion of autonomous agents? A traditional codebase is a dead artifact, a static blueprint of a city that’s already been built, telling you nothing of the living, breathing reality of its traffic, energy consumption, and structural stresses. To engineer a living system, you need a living model.

This is the moment the hypergraph of functions enters the scene.

The foundational shift: we move from a collection of inert files to a live, interconnected graph. Every function, every service, every API schema, every data dependency is a node. The relationships between them — what calls what, what data flows where, what depends on what — are the edges. A hypergraph takes this a step further, allowing edges to connect many nodes at once, perfectly modeling the complex, many-to-many relationships that define modern software.

This is a queryable, computable, ever-evolving representation of the entire system. It is the engine that powers our context-aware tools, runtime, and entire understanding — the Digital Twin.
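To make the difference between a graph and a hypergraph concrete, here is a minimal in-memory sketch. The `Hypergraph` and `Hyperedge` classes, the edge kinds, and the node names are hypothetical; a real Digital Twin would need persistence, versioning, and far richer node metadata.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Hyperedge:
    kind: str                 # e.g. "calls", "shares_schema"
    nodes: frozenset[str]     # any number of nodes, not just two


@dataclass
class Hypergraph:
    nodes: set[str] = field(default_factory=set)
    edges: set[Hyperedge] = field(default_factory=set)

    def connect(self, kind: str, *names: str) -> Hyperedge:
        """Add one edge that joins all the named nodes at once."""
        edge = Hyperedge(kind, frozenset(names))
        self.nodes.update(names)
        self.edges.add(edge)
        return edge

    def neighbors(self, name: str) -> set[str]:
        """Every node that shares at least one hyperedge with `name`."""
        found: set[str] = set()
        for edge in self.edges:
            if name in edge.nodes:
                found |= edge.nodes
        found.discard(name)
        return found


# Hypothetical example: an ordinary two-node edge and a three-node hyperedge.
graph = Hypergraph()
graph.connect("calls", "post_users", "create_user")
graph.connect("shares_schema", "create_user", "send_welcome_email", "UserSchema")
```

The second `connect` call is the part an ordinary graph cannot express in one edge: two functions and a schema bound together by a single relationship.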

How do you have a twin of a system that doesn’t yet exist?

You don’t. The Digital Twin is not created perfectly formed in a vacuum. It co-evolves with the system, starting its life from intent, not from code.

- The Seed Crystal: The genesis of the hypergraph is the published RFD. The moment an RFD is approved, its machine-readable contracts — API definitions, data schemas, service boundaries — are used to generate the v0.1 hypergraph. This initial graph is a blueprint of the intended system. It is a model of our architectural promises before a single line of application code has been written.

- From Intent to Reality: As the first AI agents begin their work, driven by our TDD process, the Verifiable Runtime plays a dual role. It validates the generated code against the tests. Upon successful validation, it uses that new code to enrich the hypergraph. A skeletal node representing a POST /users endpoint from the RFD is now replaced and fleshed out with the living function that implements it, complete with its dependencies and performance characteristics.

The Digital Twin thus grows organically. It evolves from a low-fidelity model of pure intent into a high-fidelity model of physical reality, always staying in lockstep with the deployed code.

This living model is what makes true engineering possible. The architectural rules from the RFD are not suggestions in a document; they are encoded as constraints within the graph itself. An AI-generated function that attempts to make an illegal connection — for example, having the payment service directly call a function deep inside the user profile service — is structurally impossible. It’s a violation of the system’s physics.

This is how we maintain architectural integrity at scale and speed.
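One way to picture a rule that lives in the graph rather than in a document is to make the graph refuse the edge. The sketch below assumes a single rule (cross-service calls may only target a public API) and uses invented names throughout: `OWNER`, `PUBLIC_API`, `add_call_edge`, and the function names are illustrative only.

```python
class ArchitectureViolation(Exception):
    """Raised when a proposed connection breaks a published constraint."""


# Hypothetical registry: which service owns each function, and which are public.
OWNER = {
    "charge_card": "payment",
    "get_profile": "user_profile",
    "_normalize_address": "user_profile",
}
PUBLIC_API = {"charge_card", "get_profile"}


def add_call_edge(edges: set[tuple[str, str]], caller: str, callee: str) -> None:
    """Add caller -> callee only if it respects service boundaries."""
    crosses_boundary = OWNER[caller] != OWNER[callee]
    if crosses_boundary and callee not in PUBLIC_API:
        raise ArchitectureViolation(
            f"{caller} ({OWNER[caller]}) may not call internal function "
            f"{callee} ({OWNER[callee]})"
        )
    edges.add((caller, callee))
```

Because the check sits inside the only function that can add an edge, the illegal connection never enters the graph at all, which is the sense in which a violation becomes structurally impossible rather than merely discouraged.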

Forging the Tools of Engineering: Context-Aware IDEs

For decades, we’ve called ourselves “software engineers,” but our tools have often been little more than sophisticated text editors. An engineer in the physical world works with instruments that understand the laws of physics, material stress, and system tolerances. A civil engineer’s CAD software doesn’t just let them draw lines; it simulates the load-bearing capacity of a beam. Our tools have been largely ignorant of the system our code lives in.

They check syntax, but they don’t understand intent. They are passive canvases.

The AI-DLC demands we abandon these primitive instruments. It requires a new class of tooling that transforms coding from a craft into a true engineering discipline: context-aware tools.

The IDE should be more than a text editor with plugins; it must become a foundry, a cockpit where the human engineer directs the creation of a complex system, armed with instruments that are connected to the hypergraph’s reality. The tool is aware of the entire system as a whole, in real time.

It has profound, practical implications for the daily workflow:

- Intelligent TDD Assistance: When a human or an AI agent writes a BDD scenario or a failing test, the tool’s role is not just to auto-complete a line of code. Because it is connected to the hypergraph, the IDE understands the purpose of that test and the contract that needs to be fulfilled. Its code generation suggestions are not based on statistical probability gleaned from open-source code; they are laser-focused on the single goal of making that specific test pass while adhering to the established architectural patterns of your system.

- Real-time Architectural Compliance: As an engineer creates a new function, the tool acts as a tireless architectural steward. It provides immediate, non-intrusive feedback as a deep, systemic check against the living architecture.

“Warning: This function creates a cyclical dependency between the AuthService and the BillingService, violating the acyclic graph principle defined in RFD-0237.”

- Instant Performance Simulation: Before a change is ever committed, the engineer can understand its systemic impact. By leveraging the Digital Twin, the IDE can answer critical questions on the fly.

“Simulating this new database query on the Digital Twin predicts a p99 latency of 300 ms under expected load, which exceeds the 100 ms NFR for this service. Consider adding an index to the ‘users’ table or fetching this data from the cache.”
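The cyclical-dependency warning in the list above comes down to a standard graph check. Here is a minimal sketch of it using depth-first search; the function names and the way the service graph is represented (a dict of sets) are assumptions made for illustration.

```python
from typing import Optional


def find_cycle(deps: dict[str, set[str]]) -> Optional[list[str]]:
    """Return one dependency cycle as a list of services, or None if acyclic."""
    visiting: list[str] = []   # the current depth-first path
    done: set[str] = set()     # nodes fully explored without finding a cycle

    def visit(node: str) -> Optional[list[str]]:
        if node in done:
            return None
        if node in visiting:
            # We walked back onto our own path: slice out the loop.
            return visiting[visiting.index(node):] + [node]
        visiting.append(node)
        for nxt in sorted(deps.get(node, ())):
            cycle = visit(nxt)
            if cycle:
                return cycle
        visiting.pop()
        done.add(node)
        return None

    for start in sorted(deps):
        cycle = visit(start)
        if cycle:
            return cycle
    return None


def would_create_cycle(
    deps: dict[str, set[str]], src: str, dst: str
) -> Optional[list[str]]:
    """Check a proposed edge src -> dst on a copy, without mutating the graph."""
    trial = {name: set(targets) for name, targets in deps.items()}
    trial.setdefault(src, set()).add(dst)
    return find_cycle(trial)
```

A tool could run `would_create_cycle` on every keystroke-level change, since the check works on a copy and never touches the live graph.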

This is the difference between being a scribe and being an engineer. The tools stop being passive and become active, opinionated partners in the engineering process. They are imbued with the system’s constitution and a live model of its reality, allowing the human at the helm to make informed, strategic decisions instead of just writing code and hoping it works. This is how we build with intent, precision, and foresight.

The Technical Immune System: Verifiable by Design

Gates are passive. They are checkpoints you pass through.

A system built at machine speed requires more than a simple firewall or a set of quality gates. We need an adaptive immune system that inherently understands what belongs and what must be rejected. This system ensures that only healthy, correct, and intentional code can ever thrive. It operates on a clear, unbroken chain of verification, beginning with human intent, not code.

- Anchoring to Intent with BDD: The process starts with a conversation, captured as a Behavior-Driven Development (BDD) scenario. The human “Mission Architect” defines the desired outcome in a simple, structured language that bridges the gap between business logic and technical implementation:

“Given a customer’s cart contains an item with limited stock, when they proceed to checkout, then the system must reserve the item in inventory.”

This scenario is the “soul” of the feature. It is the unambiguous, human-readable contract of what we are building, providing the intent that will guide the entire creation process.

- From Intent to Proof with AI-Augmented TDD: The BDD contract is the first input for our AI agents. The initial agent acts as a skeptic: it interprets the BDD scenario and generates a comprehensive suite of Test-Driven Development (TDD) tests that will serve as the rigorous scaffolding for the code. It writes the failing unit, integration, and end-to-end tests that demonstrate, concretely and repeatably, that the BDD contract is not yet met.

- Closing the Loop with Code Generation: Only once this “scaffolding of proof” exists does a second agent (or the same one) get tasked with writing the application code. The goal is singular: make the failing tests pass.

This inverts the traditional model. Instead of writing code first and testing it afterward, we generate code as the logical solution to a pre-existing, comprehensive suite of tests.
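Here is how the checkout scenario might look once it has been turned into a test and then into code. This is a minimal sketch using Python’s standard `unittest` module; the `Inventory` class, the `checkout` function, and the `lamp` item are hypothetical stand-ins, and a real suite would add integration and end-to-end tests as well.

```python
import unittest


class Inventory:
    def __init__(self, stock: dict[str, int]):
        self.stock = dict(stock)
        self.reserved: dict[str, int] = {}

    def reserve(self, sku: str, qty: int = 1) -> None:
        if self.stock.get(sku, 0) < qty:
            raise ValueError(f"insufficient stock for {sku}")
        self.stock[sku] -= qty
        self.reserved[sku] = self.reserved.get(sku, 0) + qty


def checkout(cart: list[str], inventory: Inventory) -> None:
    """Written second: the smallest code that makes the scenario's test pass."""
    for sku in cart:
        inventory.reserve(sku)


class CheckoutReservesLimitedStock(unittest.TestCase):
    """Written first, directly from the BDD scenario."""

    def test_limited_stock_item_is_reserved(self):
        # Given a customer's cart contains an item with limited stock
        inventory = Inventory({"lamp": 1})
        cart = ["lamp"]
        # When they proceed to checkout
        checkout(cart, inventory)
        # Then the system must reserve the item in inventory
        self.assertEqual(inventory.reserved, {"lamp": 1})
        self.assertEqual(inventory.stock["lamp"], 0)
```

Before `checkout` exists, the test fails with a `NameError`; that failure is the starting signal for the code-generating agent, and the Given/When/Then comments keep the link back to the original scenario visible.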

This entire TDD cycle happens locally, on a developer’s machine, against the Digital Twin. The feedback loop is measured in seconds, not minutes.

Finally, all this code flows toward the Verifiable Runtime. Think of it as a T-cell in our system’s bloodstream. It is the final, non-negotiable checkpoint. It receives the proposed code change along with its entire chain of provenance: the original BDD scenario and its TDD scaffolding. The runtime’s job is to:

- Execute the Proof: It runs the full gauntlet of tests within a secure sandbox.

- Verify the Physics: It checks the proposed change against the hypergraph, ensuring it adheres to the structural rules and constraints of the “living architecture.” A change that passes its tests but violates an architectural principle is treated as a dangerous mutation and is rejected.

Only code that is born from a clear behavior, proven correct by its tests, and compliant with the system’s fundamental architecture is allowed to be integrated. This is how we ensure that what we build is technically correct and is what we intended.
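The runtime’s checks can be sketched as a single gate function. This is an illustration under simplifying assumptions, not a description of a real system: `ChangeProposal`, `verify`, and the idea of modelling architectural rules as a set of forbidden edges are invented here, and the secure sandbox is left out entirely.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ChangeProposal:
    scenario: str                          # the original BDD scenario
    tests: list[Callable[[], bool]]        # the TDD scaffolding
    new_edges: set[tuple[str, str]]        # connections the change introduces


def verify(
    change: ChangeProposal, forbidden_edges: set[tuple[str, str]]
) -> tuple[bool, str]:
    """Accept a change only if provenance, tests, and architecture all check out."""
    if not change.scenario or not change.tests:
        return False, "rejected: missing provenance"
    # Execute the proof (a real runtime would do this inside a sandbox).
    if not all(test() for test in change.tests):
        return False, "rejected: tests failed"
    # Verify the physics: passing tests do not excuse an illegal connection.
    illegal = change.new_edges & forbidden_edges
    if illegal:
        return False, f"rejected: architectural violation {sorted(illegal)}"
    return True, "accepted"
```

The ordering is deliberate: a change with green tests still reaches the architectural check, so "passes its tests but violates a principle" ends in rejection.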

Beyond Merge Conflicts: The Fluidity of Jujutsu

We have designed a system capable of generating, verifying, and integrating thousands of correct changes in parallel. A swarm of AI agents, guided by human conductors, works on every part of the codebase simultaneously. And now, this entire high-velocity ecosystem runs headfirst into a wall built in 2005: git merge.

The entire model of branching and manual conflict resolution was designed for teams of humans operating at human speed. It treats history as a brittle, linear narrative. When two developers — or two thousand AI agents — branch from the same point and make conflicting changes, the second one to merge is punished with “merge hell.” This forces a sequential, lockstep integration process that is the absolute antithesis of the parallel, swarming behavior our lifecycle depends on. It is a fundamental roadblock.

To unlock the potential of the AI-DLC, we must move away from Git’s model, where a conflict stops the work until a human resolves it, toward the conflict-tolerant, operation-aware model of systems like Jujutsu.

Jujutsu approaches this problem from a different angle. It is Git-compatible, but it treats a change as a first-class object with a stable identity rather than as a commit shackled to a branch. A conflict does not halt a rebase or merge: it is recorded inside the resulting commit and can be resolved later, and descendant changes are rebased automatically when an ancestor is rewritten. Every action on the repository is also written to an operation log, so any operation can be inspected and undone. Think of it less like a tree with rigid branches that must be painstakingly grafted back together, and more like a bag of Lego bricks. Each change is a self-contained, validated brick, and the order in which bricks arrive matters far less than it does in a branch-and-merge workflow. On top of this, the AI-DLC can layer its own resolution policy for recorded conflicts (for example, last writer wins), keeping a clear, auditable record of the overwritten change.

This has profound implications for the AI-DLC:

- Frictionless Parallelism: An AI agent refactoring the logging library doesn’t need to know or care that another agent is simultaneously hardening the authentication service. Their changes are independent operations that don’t block each other. They simply add their validated bricks to the bag. The Verifiable Runtime doesn’t perform a thousand merges; it applies a thousand validated operations to the hypergraph.

- Logical, Actionable Conflict Resolution: When two agents do modify the same function, the conflict is no longer a scary, red-text error in a terminal. The system can often resolve it logically based on predefined rules. If human intervention is required, the conflict is presented to the human conductor not as a messy diff, but as a clear choice between two competing, validated operations:

“Agent A’s change renames this function to calculate_final_price. Agent B’s change alters its signature to accept a discount_code. Which operation should take precedence, or should a new mission be created to reconcile them?”
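Presenting a conflict as a choice rather than a diff can be sketched in a few lines. The code below is purely illustrative and is not Jujutsu’s API: `Operation`, `find_conflicts`, and `describe` are hypothetical names, and "conflict" is reduced here to "two operations target the same function."

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Operation:
    agent: str
    target: str        # the function being changed
    summary: str       # a human-readable description of the change


def find_conflicts(ops: list[Operation]) -> dict[str, list[Operation]]:
    """Group operations by target; a target with 2+ operations needs a decision."""
    by_target: dict[str, list[Operation]] = defaultdict(list)
    for op in ops:
        by_target[op.target].append(op)
    return {target: group for target, group in by_target.items() if len(group) > 1}


def describe(target: str, group: list[Operation]) -> str:
    """Render a conflict as a question for the human conductor."""
    lines = [f"Conflict on {target}:"]
    lines += [f"  {op.agent}: {op.summary}" for op in group]
    lines.append("Which operation should take precedence?")
    return "\n".join(lines)
```

Operations on different targets never appear in the result, which mirrors the claim that independent changes should not block each other.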

By shifting from a rigid, history-based model to a flexible, operation-based one, we remove the final process bottleneck. We create a system where massive, parallel collaboration is the default state, not a constant source of friction.

Part III: The Living System - Execution & Operation

We have designed a strategic framework for defining intent and an engine of creation capable of building correct software at a staggering velocity. But software that isn’t running in production is just a theoretical exercise. The final, and most critical, part of the lifecycle is how this system comes to life. How it deploys. How it understands itself. This is where the machine truly takes over.

The Native Habitat: Microservices & Serverless

A swarm of AI agents building a monolithic application is like a colony of ants sculpting a massive boulder. It is not merely inefficient; it is a category mistake. Every agent’s action interferes with every other’s. The sheer cognitive load of the entire system is too vast, the internal connections too tangled, the risk of a single change too catastrophic. The AI-DLC is a process defined by massive parallelism, radical decoupling, and continuous, atomic change. To attempt it on a monolithic architecture is to build a jet engine and bolt it to a horse cart.

The architecture of the AI-DLC is therefore a consequence of the process, not a matter of taste.

It is the physical environment that must exist for this new organism to live.

This native habitat is a combination of microservices and serverless functions.

The Monolith as a Cage

Before understanding the solution, we must respect the problem. A monolith actively resists the AI-DLC. Its tightly coupled nature creates a stateful entanglement where a change in one module has unpredictable, cascading effects on another. For our hypergraph, this would be a nightmare—a dense, unreadable knot of connections where the blast radius of any change is, for all practical purposes, the entire system. This kills the local, high-speed verification cycle of the Digital Twin because the “local” context is the whole application. Furthermore, it enforces a deployment lockstep. The entire boulder must be moved at once, meaning a single failing mutation from one AI agent holds back thousands of other, perfectly valid changes. The monolith is a cage that enforces a slow, sequential, high-risk process.

Microservices: Bounded Worlds for AI Agents

Microservices are the first, crucial act of liberation. They shatter the monolith’s boulder into manageable, well-defined rocks. Each service is an implementation of a Bounded Context—a universe unto itself, with a clear purpose, its own data, and an explicit API contract for how it interacts with the outside world.

This is the architectural breakthrough that makes the AI-DLC possible. A bounded context is a problem space constrained enough for an AI swarm to effectively reason about.

- Isolated Missions: A mission to “improve payment fraud detection” is scoped entirely to the payment-processing service. The AI agents assigned to this mission operate within a world where the rules are known, the data model is contained, and the external dependencies are stable contracts.

- Tractable Hypergraphs: The hypergraph of a single microservice is clean and understandable. The Verifiable Runtime can analyze the impact of a change within this bounded world with a high degree of certainty.

- Autonomous Evolution: It enables a form of Conway’s Law for AI. A dedicated swarm can own a service, from its RFD to its deployment and observation. A high-churn service like recommendations can evolve hundreds of times a day without destabilizing a slow-moving, critical service like authentication.

Serverless: The Atomic Unit of Verifiable Creation

If microservices are the bounded worlds, then serverless functions are the atoms that constitute them. They are the ultimate expression of granularity, the perfect “Lego brick” for our AI agents.

The superpower of serverless is statelessness. A stateless function behaves like a pure, mathematical machine. It takes inputs, performs logic, and produces outputs, carrying no memory and no hidden state from one invocation to the next. This makes it a dream for automated verification. As long as the function is deterministic and its external calls are stubbed, the Verifiable Runtime can test it with a set of inputs and know exactly what output to expect. There are no hidden variables. A change is either correct or incorrect; there is no ambiguity.
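A small sketch shows why statelessness makes verification easy. The `price_with_tax` handler, its event shape, and the `is_repeatable` check are all assumptions made for illustration; amounts are kept in integer cents to avoid floating-point surprises.

```python
def price_with_tax(event: dict) -> dict:
    """A stateless handler: the output depends only on the event passed in."""
    subtotal_cents = sum(item["price_cents"] * item["qty"] for item in event["items"])
    tax_cents = subtotal_cents * event["tax_rate_percent"] // 100
    return {"subtotal_cents": subtotal_cents, "total_cents": subtotal_cents + tax_cents}


def is_repeatable(handler, event: dict, runs: int = 100) -> bool:
    """Call the handler repeatedly and confirm the output never changes."""
    first = handler(event)
    return all(handler(event) == first for _ in range(runs))
```

A handler that keeps a counter or reads a clock would fail `is_repeatable`, which is a cheap way for a runtime to notice that a function is not as stateless as it claims.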

Furthermore, the inherently event-driven nature of serverless architecture aligns perfectly with the swarming model. A “new user signed up” event doesn’t call a single, monolithic function. It triggers a decentralized cascade of discrete serverless functions: one to create the user profile, another to send a welcome email, a third to update analytics, a fourth to provision a trial. The orchestration is decentralized, just like our development process.
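The sign-up cascade can be sketched as a tiny in-process publish/subscribe registry. This stands in for a real event bus, which it is not: the `on` decorator, the `publish` function, the `user.signed_up` event name, and the four handlers are hypothetical, and a production system would deliver events asynchronously.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], str]
_subscribers: dict[str, list[Handler]] = defaultdict(list)


def on(event_type: str):
    """Decorator that subscribes a function to an event type."""
    def register(fn: Handler) -> Handler:
        _subscribers[event_type].append(fn)
        return fn
    return register


def publish(event_type: str, payload: dict) -> list[str]:
    """Deliver the event to every subscriber; no handler knows about the others."""
    return [handler(payload) for handler in _subscribers[event_type]]


@on("user.signed_up")
def create_profile(event: dict) -> str:
    return f"profile created for {event['email']}"


@on("user.signed_up")
def send_welcome_email(event: dict) -> str:
    return f"welcome email queued for {event['email']}"


@on("user.signed_up")
def update_analytics(event: dict) -> str:
    return "signup counted"


@on("user.signed_up")
def provision_trial(event: dict) -> str:
    return f"trial provisioned for {event['email']}"
```

Adding a fifth reaction to a sign-up means adding one more decorated function; nothing that already exists has to change, which is what lets separate agents own separate handlers.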

This architectural style is the physical embodiment of our entire philosophy. It provides the discrete, independently testable, and autonomously deployable units that the AI-DLC’s swarms require to function. It is not an arbitrary choice made on a whiteboard; it is the only habitat in which this new, faster form of life can actually evolve.

The Flow: Continuous, Intelligent Deployment

We have designed a system that produces a constant stream of discrete, verifiably correct, and architecturally compliant units of value. The old world’s answer to the question “What do we do with them?” was “Deployment Day.”

This was a human ritual born of fear. Because the batch size of change was enormous and the risk of failure was catastrophic, we surrounded the act of deployment with ceremony: change freezes, late-night war rooms, and a hero on standby to perform the dreaded rollback.

To be clear, the DevOps revolution took a sledgehammer to that old model, and for that, it was essential. It gave us powerful tools of separation. Blue-green deployments decoupled the act of deploying code from the act of releasing it, allowing us to have a new version ready and waiting before making the final switch. Feature flags took this a step further, allowing us to release code to production in a dormant state, to be activated for specific users later. These were the crucial first steps in reducing risk and increasing velocity.

But they were still fundamentally human-driven rituals. A human still decides when to switch the router for a blue-green swap. A human still logs into a dashboard to flip a feature flag. This is a human-speed action in a machine-speed world. When thousands of validated changes are produced per hour, this manual gating becomes the new bottleneck.

The AI-DLC demands the next evolution. In this new world, there is no ceremony. There is no event. There is only a continuous, living flow from creation to reality.

The Verifiable Runtime doesn’t just commit code to a repository; it’s the antechamber to production. Once a change has passed its BDD and TDD gauntlet and been cleared by the architectural immune system of the hypergraph, it is deemed eligible to go live. But it’s not a big-bang push or a manual flag flip. That’s far too crude and violent for a living system.

Instead, we treat deployment like a biological process, managed by a new entity: the AI Release Conductor. This agent takes the validated change and performs a delicate, intelligent, and autonomous rollout.

The process begins as a microscopic canary. The change is exposed to the smallest possible surface area - a single internal user, a fraction of a percent of synthetic traffic. The Conductor then watches. It doesn’t just watch server metrics; it taps directly into the rich, four-layered reality of the Nervous System (our Control Tower). It looks for the subtlest signs of trouble:

- A 5 ms latency increase in a downstream service three hops away?

- A statistically significant uptick in user rage-clicks in the checkout funnel on the other side of the planet?

- A minor violation of the architectural principle of data immutability that only manifests under load?

The Conductor sees it, correlates it, and, if any predefined risk profile is breached, it retracts the change instantly and automatically. The rollback is surgical and immediate, often occurring before a single human is aware there was a problem. The failed mutation is simply discarded, and a new mission is often created for the AI swarm to attempt a different solution.

However, this autonomy does not mean an abdication of responsibility. For the most critical, high-stakes changes - a modification to the billing system’s core logic, for example - the Conductor’s flow can be configured with a crucial checkpoint. The process can be set to pause after a successful internal canary test, awaiting an explicit, logged approval from a designated human lead before proceeding to external users. This creates an auditable “human-in-the-loop” gate where it matters most. We get the speed and safety of autonomous deployment for 99% of changes, while reserving human judgment and accountability for the 1% that could have profound business impact.

If the change proves healthy, the exposure grows. The Conductor gradually widens the aperture - 1% of real users, then 5%, then 20% - continuously, at a pace governed by our defined risk tolerance, not by a human schedule. The system is constantly probing, testing, and confirming the fitness of each new evolutionary step.
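The Conductor’s behavior can be condensed into a short loop. This is a sketch under stated assumptions: the `STAGES` list (including the final 100% step), the `rollout` function, and the callback signatures are invented for illustration, and the health check is reduced to a single yes-or-no callback instead of the four-layer signal described above.

```python
from typing import Callable

# Percent of traffic at each stage; the first stage is the internal canary.
STAGES = [0.1, 1, 5, 20, 100]


def rollout(
    is_healthy: Callable[[float], bool],
    needs_human_gate: bool = False,
    human_approved: Callable[[], bool] = lambda: False,
) -> tuple[str, float]:
    """Widen exposure stage by stage; retract on the first unhealthy signal.

    Returns (outcome, exposure_percent_reached).
    """
    exposure = 0.0
    for index, percent in enumerate(STAGES):
        if not is_healthy(percent):
            return "rolled_back", 0.0          # surgical, automatic retraction
        exposure = percent
        # Optional checkpoint after the internal canary, for high-stakes changes.
        if index == 0 and needs_human_gate and not human_approved():
            return "awaiting_approval", exposure
    return "fully_deployed", exposure
```

For a billing change, a caller would pass `needs_human_gate=True`, and the rollout would stop at the internal canary until a designated lead approves; for everything else the loop runs to completion on its own.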

This is the death of the version number. There is no “version 2.1.5” that everyone gets at once. There is only a perpetual, self-healing stream of evolution. The codebase is less like a built artifact and more like a coral reef, with thousands of tiny, validated changes constantly and safely adding to its structure, becoming one with the living system.

The Nervous System: Radical Observability

The entire lifecycle we’ve described - intelligent swarms, living architecture, and the continuous, biological flow of deployment - is only possible if we can see. And the way we’ve been seeing is no longer good enough.

For years, we’ve practiced “monitoring.” We collect logs, metrics, and traces. We ask the system if it’s okay by setting up alerts for when known thresholds are breached. This is like asking a patient, “Do you have a fever?” It’s a useful question, but it tells you almost nothing about the complex interplay of their circulatory, nervous, and endocrine systems.

The AI-DLC requires a shift from monitoring to Radical Observability. We need a live MRI, blood panel, and neural activity map of the entire organism and its environment, all synthesized in real time. This is the purpose of the Control Tower.

This is not a dashboard. A dashboard is a collection of charts that a human must interpret. The Control Tower is a synthesis engine. It is the sensory cortex for our city of code, and it watches four distinct layers of reality at once:

- Application Health: The basics are still here - logs, metrics, traces - but they are the raw data, not the final product. An AI constantly analyzes these streams, hunting not just for the “known unknowns” (the alerts we set up), but the “unknown unknowns” - subtle, multifaceted patterns across thousands of signals that indicate emergent failure before it cascades. It’s the difference between an alarm for high CPU and an insight that a 2% increase in database latency combined with a 1% drop in user session length in a specific geographic region predicts a major outage in 45 minutes.

- User Experience: The system directly observes the impact of its own evolution on its inhabitants. The code can be 100% correct according to its tests, but if it harms the user’s experience, it is a failed mutation and must be retracted. The Control Tower ingests real-time user data, watching for frustration signals like rage-clicks, drops in engagement, or negative sentiment in feedback channels. Technical correctness is subordinate to user value.

- Architectural Integrity: Is the city we’ve built matching the blueprint? The Control Tower perpetually compares the deployed reality, as represented in the hypergraph, against the living architecture of the published RFD. It is the guardian of our architectural constitution, alerting us to architectural drift - the slow rot of ad hoc fixes and unapproved connections that turns an elegant design into a slum.

- Lifecycle Health: Finally, we apply the principles of observability to our own process of creation. The Control Tower watches the factory itself. How efficient are our AI agents? Where are the bottlenecks in our RFD loops? Is a particular part of the codebase generating more failed mutations than others? We tune the factory for maximum evolutionary efficiency.
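Of the four layers, architectural integrity is the easiest to sketch, because drift is just the difference between two sets of connections. The function name, the edge representation, and the service names below are assumptions for illustration.

```python
def architectural_drift(
    approved: set[tuple[str, str]], observed: set[tuple[str, str]]
) -> dict[str, set[tuple[str, str]]]:
    """Compare the connections the RFD allows with those seen in production."""
    return {
        "unapproved": observed - approved,   # connections nobody signed off on
        "unrealized": approved - observed,   # promised connections not yet seen
    }


# Hypothetical example: one ad hoc connection has crept into production.
approved_edges = {("checkout", "payments"), ("checkout", "inventory")}
observed_edges = {("checkout", "payments"), ("checkout", "user_db")}
drift = architectural_drift(approved_edges, observed_edges)
```

Here `drift["unapproved"]` contains the direct `checkout` to `user_db` connection, which is exactly the kind of shortcut the Control Tower is meant to surface.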

This nervous system is what allows human conductors to manage a system that operates at a speed no human can manually track. We stop watching the individual cars; we watch the flow of traffic, and the AI tells us where the jams are before they happen.

Conclusion: The New Job — From Coder to Conductor

So, we arrive at the central, unspoken question that haunts every discussion about AI: What’s left for us? When an army of intelligent agents can write, test, and deploy code at a speed we can’t possibly match, what is our purpose?

The fear is that we become obsolete.

This is the wrong fear. The right perspective is that the job we’ve been doing — the tedious, line-by-line translation of human requirements into machine syntax — is what becomes obsolete. And this is a liberation. We are being freed from the assembly line to finally become the architects and city planners we were always meant to be. Our job doesn’t disappear; it elevates. We stop being coders and we become conductors.

A conductor does not play every instrument. They don’t need to know the precise fingering for the violin or the embouchure for the trumpet. A conductor’s role is to understand the music as a whole, to shape the performance, and to guide the emergent harmony from a hundred different players. This is our new job.

Let’s break down what this means:

- We Set the Strategy: The conductor chooses the music. Our first and most critical role is to provide the strategic intent. We use tools like Wardley Mapping to understand the landscape, to identify where to play and why. We define the mission. The AI is a brilliant tactical engine, but it needs us to give it a destination worth driving to.

- We Design the System: The conductor shapes the interpretation of the music. We lead the RFD process, synthesizing the best of human and machine intelligence into a coherent architectural vision. We are the final human gatekeepers who say, “Yes, this is the elegant, resilient, and correct way to build this.” We apply our taste, our experience, and our ethical judgment — qualities that cannot be automated.

- We Orchestrate the Builders: The conductor cues the sections and manages the tempo. We use AI orchestration tools to design the workflows that build the system. We are the ones who tell the AI how to build. We design the BDD-to-TDD flows, the validation checks, and the feedback loops. We are the meta-engineers, building the factory that builds the product.

- We Debug the Emergent Whole: When a single violin is out of tune, it’s an easy fix. When a subtle dissonance emerges from the interplay of the entire brass and woodwind sections, only the conductor can hear it. Our most crucial role will be as the system-level debuggers. When a truly novel, complex bug appears from the interaction of a thousand perfectly coded microservices, it is human intuition and a holistic understanding of the system that will find the ghost in the machine.

We stop writing boilerplate and start making decisions. We stop chasing syntax errors and start architecting elegant systems. We stop being bricklayers, focused on the individual stone, and we become the conductors who see and shape the entire cathedral.

It’s a better job. It’s the job we should have been doing all along.